It’s not an exaggeration to say AI represents one of the most pressing questions facing humanity. While the fears of AI often revolve around the emergence of an amoral Superintelligence, today I want to explore a simpler idea.

The real problem facing us is not getting AI “right” but getting humans wrong.

A few years ago, I saw a lecture by a CEO whose company was pioneering the ability of computers to recognize human emotions. They did this based on the “activation” of muscles in the face. The link between facial expressions and emotions is, of course, something we humans all know how to read. But some researchers claim they can go beyond the gross characteristics of “smile” vs. “frown” to map out specific facial muscles and their links to specific emotional states.

The AI built by the CEO could identify positions of key facial muscles and use them to infer the emotional state of the AI’s human user. The machine would then respond in ways that take that specific emotional state into account.

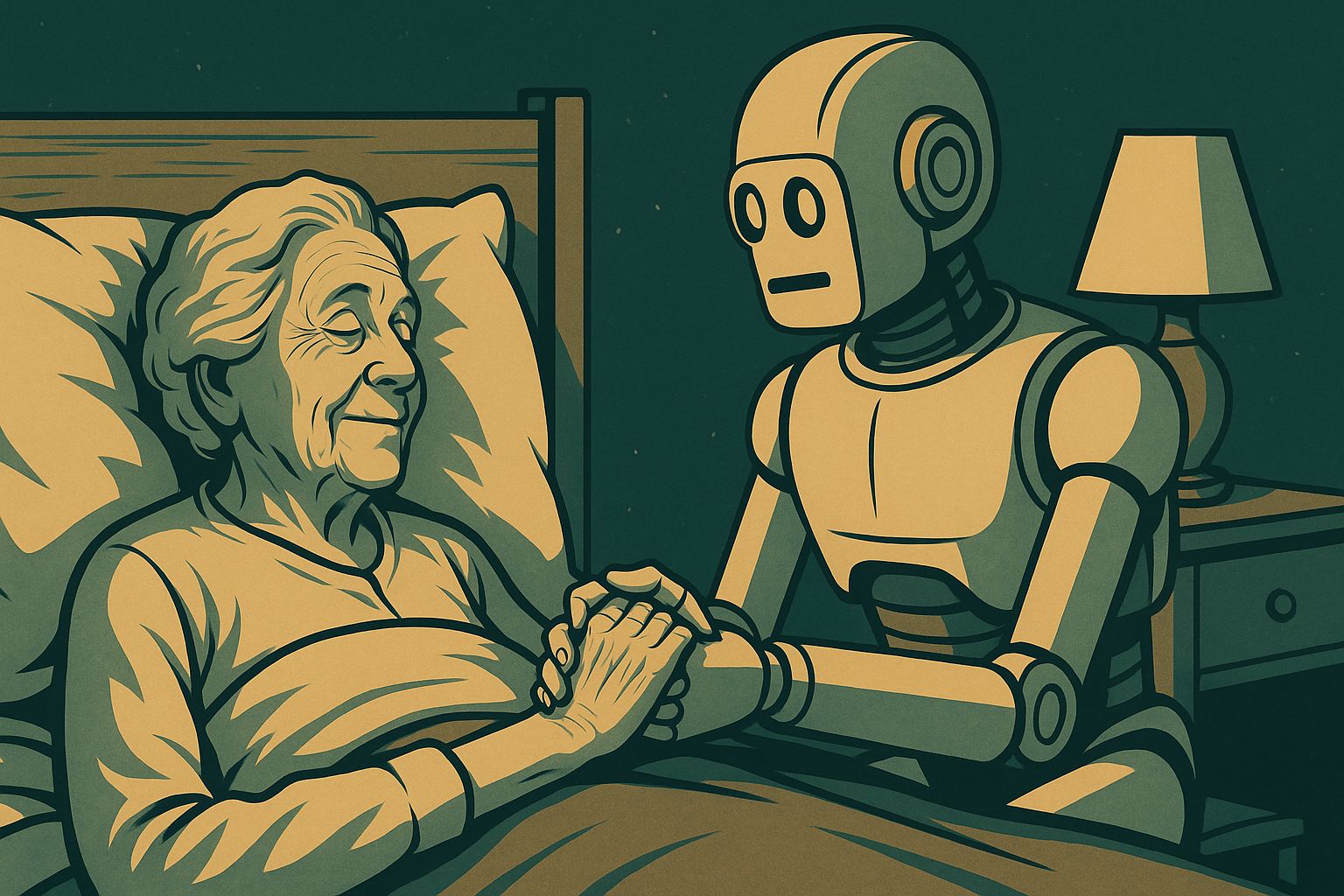

One potential application for the tech was “emotional robots” for the elderly. Having a machine that could converse in an empathic way would give older people without much human contact “someone” to spend time with. I was pretty horrified. Despite how remarkable the tech might be, the whole idea seemed like a trap we were preparing for ourselves.

The assumption that lies behind the CEO’s “emotional computing” is that facial states capture emotions, i.e., from the computer’s point of view they are emotions. At the root of this equation is a reduction of human experience to neural programming whose outward manifestation can be captured algorithmically.

My point here is not to begin an argument about the nature of emotions and consciousness. Instead, it’s to see how many built-in assumptions AI technology already holds about what we are as humans (i.e., a kind of biological machine). As this tech gets deployed in our world, it will also reframe that world in ways that will be impossible to escape from. If you want an example, think about smartphones. Can you really exist in modern society without one?

So, imagine we get robots that keep lonely older people company. That’s a good thing, right? But won’t that also relieve us from questioning how we ended up in a society that warehouses the elderly because we don’t know what else to do with them? Might there be other, more humane and human, solutions than robots (that will be sold at a steep price by the robot companies)?

At the root of it all is the fact that “emotion data” is not the same thing as the real, vivid, present, enacted emotional experiences we have as humans. Our emotions are not our faces or our voices. They aren’t data. They can’t be pulled out like a thread, one by one, from the fabric of our being.

But once a technology that treats emotions as data becomes pervasive, we may soon find that data is the only aspect of emotion we recognize or value. Once billions of dollars flood into that equation, we become trapped in a pervasive technology that flattens our experiences and reduces our lives. And that, more than superintelligent robot overlords, is what scares me most about AI.

PS. Is emotional computing dangerous or not? Leave a comment on the website version of the post or email me at [email protected]

PPS. I was not able to have this post proofread, so please excuse any typos.

— Adam Frank 🚀